
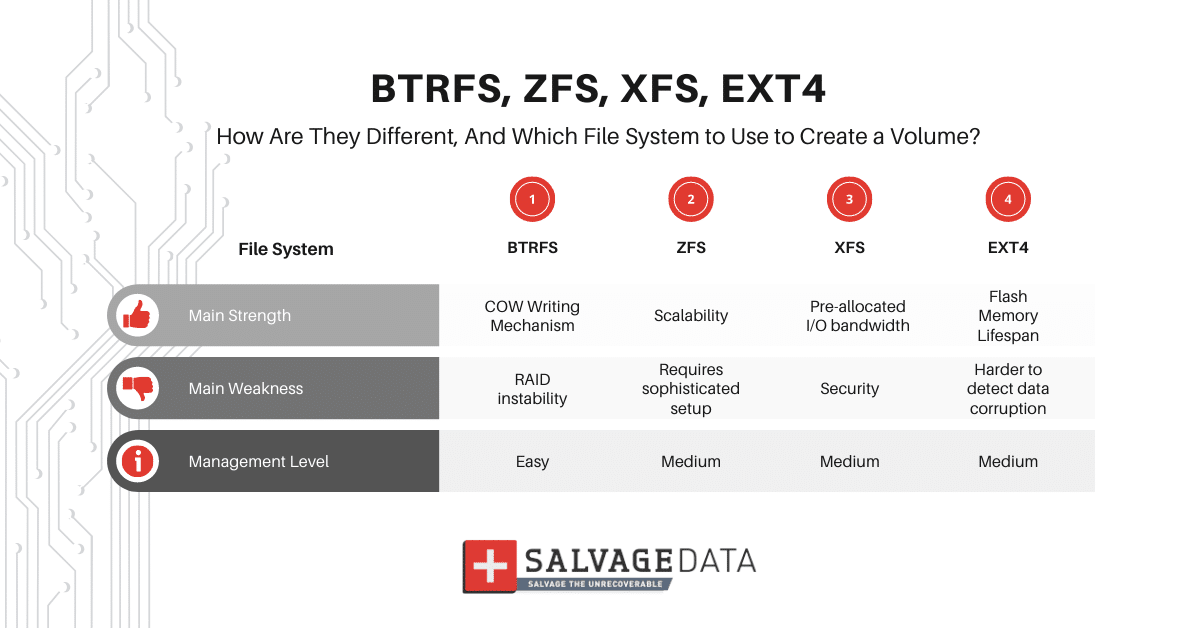

Which is the winner in a ZFS vs BTRFS scenario? Which one brings the best performance in an EXT4 vs XFS standoff?

The truth is that each of these file systems (ZFS, BTRFS, XFS, and EXT4, to name only the most popular) has its pros and cons.

Whether for enterprise data centers or personal use, the best choice of file system depends on the amount of data and your setup requirements.

To help your decision-making, this article compares ZFS, BTRFS, XFS, and EXT4 and looks at where each one performs best.

What Is a File System and Why It Matters

The term file system refers to the methods and structures that your operating system (OS) applies to manage how your data is stored, organized, and retrieved on a storage disk.

A file system covers elements such as file naming, metadata, directories (folders), access rules, and permissions.

Storage devices are intended merely to hold lots of bits; they have no notion of files. It is a file system, such as ZFS, BTRFS, XFS, or EXT4, that imposes that structure. Without one, the data on a storage medium would be nothing but one large, undifferentiated body of information.

A file system works much like the Table of Contents in a book: it keeps track of where each part of every file is located, which allows your files to be broken up into chunks and stored across many blocks.

In this metaphor, a file system greatly simplifies data management and access. Simply put, if you alter a chapter or move it somewhere else, you must update the Table of Contents, or the pages won’t match.
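To make the Table of Contents analogy concrete, here is a minimal toy model in Python. It is not how any real file system stores its metadata: the `ToyFileSystem` class, its tiny block size, and its methods are hypothetical, chosen only to show an index that maps each file name to the (possibly scattered) blocks holding its pieces.

```python
from __future__ import annotations

BLOCK_SIZE = 4  # hypothetical, unrealistically small block size (in bytes) to keep output readable


class ToyFileSystem:
    """Hypothetical toy model: a raw 'disk' of blocks plus an index acting as the Table of Contents."""

    def __init__(self, num_blocks: int) -> None:
        self.disk: list[bytes | None] = [None] * num_blocks  # the device itself: just blocks, no notion of files
        self.index: dict[str, list[int]] = {}  # file name -> block numbers, in file order

    def write(self, name: str, data: bytes) -> None:
        if name in self.index:
            raise FileExistsError(name)
        chunks = [data[i:i + BLOCK_SIZE] for i in range(0, len(data), BLOCK_SIZE)]
        free = [n for n, block in enumerate(self.disk) if block is None]
        if len(chunks) > len(free):
            raise OSError("disk full")
        blocks = free[:len(chunks)]  # chunks land wherever free blocks happen to be
        for n, chunk in zip(blocks, chunks):
            self.disk[n] = chunk
        self.index[name] = blocks  # update the Table of Contents

    def delete(self, name: str) -> None:
        # Removing the index entry and freeing its blocks is what "deleting" means here.
        for n in self.index.pop(name):
            self.disk[n] = None

    def read(self, name: str) -> bytes:
        # Follow the index to reassemble the file from its scattered blocks.
        return b"".join(self.disk[n] for n in self.index[name])


fs = ToyFileSystem(num_blocks=8)
fs.write("a.txt", b"hello world")           # 11 bytes -> 3 blocks
fs.write("b.txt", b"abc")                   # 1 block
fs.delete("a.txt")
fs.write("c.txt", b"twenty bytes of data")  # 5 blocks, split around b.txt
print(fs.index["c.txt"])  # [0, 1, 2, 4, 5]
print(fs.read("c.txt"))   # b'twenty bytes of data'
```

Note that `c.txt` ends up split around the block still used by `b.txt`; only the index knows how to put it back together, which is exactly the role the Table of Contents plays.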

BTRFS, ZFS, XFS, and EXT4 File Systems – Complete Comparison

Windows and macOS users don’t have much of a choice regarding a file system: each OS comes with one main file system by default (NTFS on Windows, HFS+ or its successor APFS on macOS), while FAT32 is mostly used on removable drives.

On Linux and other Unix-based systems, on the other hand, choosing one among the many available options can be a bit of a challenge.

We’ll go over each file system in more detail below, but for a handy round-up, here are our top picks:

BEST FOR ENTERPRISES:

BTRFS is great for large companies that need a convenient, easy-to-manage file system; it suits technologies and projects that don’t require high fault tolerance.

BEST FOR MAINFRAMES:

For the most part, ZFS was designed by Sun Microsystems (now part of Oracle) for high-end systems such as mainframe-class servers, clustered server environments, and supercomputers. Consequently, some of the benefits ZFS offers matter little to small businesses and private users.

BEST FOR PERSONAL PROJECTS:

Despite some capacity limitations, EXT4 is a very reliable and robust file system to work with. That makes EXT4 the best fit for SOHO (Small Office/Home Office) needs and projects requiring stable performance.

BEST FOR LARGE VOLUMES OF DATA:

XFS can be exceptionally helpful where large files are involved: huge data stores, large-scale scientific or enterprise projects, and so on.

BTRFS

B-tree file system, or BTRFS, is a file system based on the copy-on-write (COW) mechanism.

This means that when you modify a file, the file system doesn’t overwrite the existing data on the drive with the new information.

Instead, the newer data is written elsewhere. Once the write operation is over, the file system simply points to the newer data blocks (with the old information getting recycled over time).

COW also prevents problems like partial writes, which can occur during a power failure or kernel panic and can potentially corrupt your entire file system. With COW in place, a write has either happened or not happened; there’s no in-between.
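The copy-on-write behavior described above can be sketched as a toy Python model. It is a minimal illustration under simplifying assumptions, not BTRFS’s real on-disk format: the `CowStore` class and its `crash_before_commit` flag are hypothetical, and a single dictionary entry stands in for the tree of pointers a real COW file system updates. New data always goes to a fresh block, and only a single pointer switch makes it visible.

```python
from __future__ import annotations


class CowStore:
    """Hypothetical copy-on-write store (a toy model, not BTRFS's on-disk format)."""

    def __init__(self) -> None:
        self.blocks: dict[int, bytes] = {}  # block id -> contents; never modified in place
        self.root: dict[str, int] = {}      # committed pointers: file name -> current block id
        self.unreferenced: list[int] = []   # old blocks waiting to be recycled
        self._next_id = 0

    def _allocate(self, data: bytes) -> int:
        block_id = self._next_id
        self._next_id += 1
        self.blocks[block_id] = data
        return block_id

    def write(self, name: str, data: bytes, crash_before_commit: bool = False) -> None:
        # Step 1: write the new version to a fresh block; the old block is untouched.
        new_id = self._allocate(data)
        if crash_before_commit:
            # Simulated power failure: the pointer was never switched, so the
            # committed version is still the old one (the new block is just orphaned).
            return
        # Step 2: commit by switching a single pointer, so the write is all-or-nothing.
        old_id = self.root.get(name)
        self.root[name] = new_id
        # Step 3: the old block is no longer referenced and can be recycled later.
        if old_id is not None:
            self.unreferenced.append(old_id)

    def read(self, name: str) -> bytes:
        return self.blocks[self.root[name]]


store = CowStore()
store.write("notes.txt", b"version 1")
store.write("notes.txt", b"version 2", crash_before_commit=True)
print(store.read("notes.txt"))  # b'version 1': the interrupted write left no half-state
store.write("notes.txt", b"version 2")
print(store.read("notes.txt"))  # b'version 2'
```

Because the old block is never overwritten, a crash at any point leaves either the old or the new version fully intact; a real file system would also reclaim the orphaned block left behind by the interrupted write.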

BTRFS was originally designed to address the lack of pooling, checksums, snapshots, and integral multi-device spanning in Linux file systems.

The BTRFS file system focuses on fault tolerance, repair, and the implementation of advanced features such as:

- subvolumes;

- self-healing;

- online volume growth and shrinking;

- file compression;

- defragmentation;

- deduplication (storing only one copy of duplicated data on the disk).
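Deduplication is easiest to picture as content addressing: hash each chunk, and store a chunk only if its hash has not been seen before. The sketch below illustrates that general idea only; `DedupStore` and its 4-byte chunk size are hypothetical, it assumes SHA-256 digests never collide in practice, and BTRFS’s own implementation differs in its details.

```python
from __future__ import annotations

import hashlib


class DedupStore:
    """Hypothetical content-addressed store: identical chunks are kept only once."""

    def __init__(self, chunk_size: int = 4) -> None:
        self.chunk_size = chunk_size
        self.chunks: dict[str, bytes] = {}     # SHA-256 hex digest -> chunk data, stored once
        self.files: dict[str, list[str]] = {}  # file name -> digests of its chunks, in order

    def write(self, name: str, data: bytes) -> None:
        digests = []
        for i in range(0, len(data), self.chunk_size):
            chunk = data[i:i + self.chunk_size]
            digest = hashlib.sha256(chunk).hexdigest()
            self.chunks.setdefault(digest, chunk)  # a duplicate chunk is not stored again
            digests.append(digest)
        self.files[name] = digests

    def read(self, name: str) -> bytes:
        # Rebuild the file by looking up each referenced chunk.
        return b"".join(self.chunks[d] for d in self.files[name])


store = DedupStore(chunk_size=4)
store.write("a.bin", b"ABCDABCD")
store.write("b.bin", b"ABCDEFGH")
print(len(store.chunks))  # 2 unique chunks stored for 16 bytes of file data
```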

Finally, BTRFS is easier to administer and manage on small systems than the other options.

On the other hand, BTRFS is still considered fairly unstable and is known for problems with its RAID implementation, particularly RAID 5/6.

Compared with ZFS, BTRFS offers much less redundancy.

ZFS

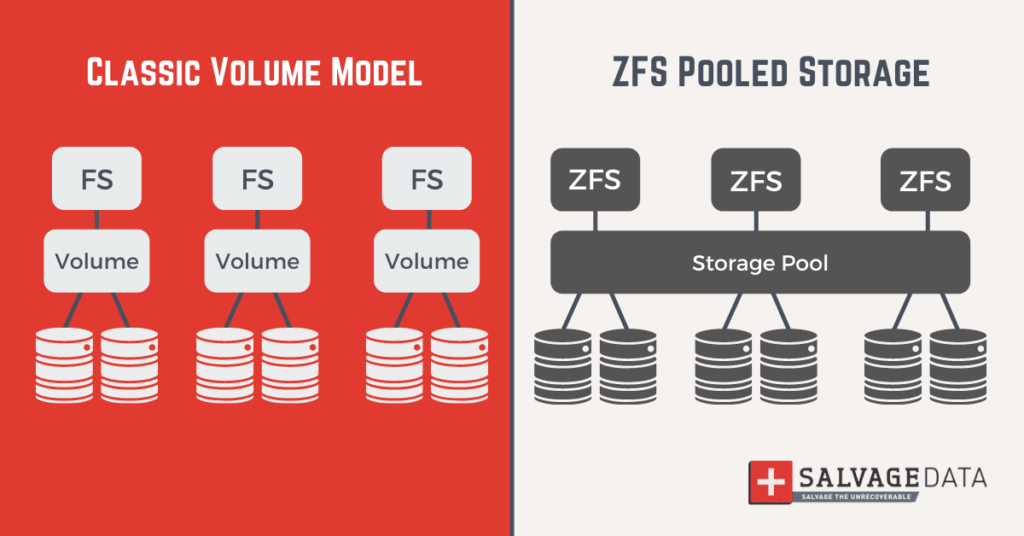

ZFS (short for Zettabyte File System) is fundamentally different in this arena because it goes beyond basic file system functionality: it can serve as both a logical volume manager (LVM) and a RAID system in one package.

By combining the file system and volume manager roles, ZFS lets you add storage devices to the current system and immediately gain new space on all existing file systems in that pool.
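A toy model can show why pooling makes new capacity instantly available to every file system in the pool. `StoragePool` and its methods are hypothetical, and the sketch ignores redundancy (mirrors, RAID-Z) and metadata overhead; on an actual ZFS system, a device is added to a pool with `zpool add`.

```python
from __future__ import annotations


class StoragePool:
    """Hypothetical model of pooled storage: every dataset draws on one shared pool of free space."""

    def __init__(self) -> None:
        self.devices: dict[str, int] = {}  # device name -> capacity in GB
        self.used: dict[str, int] = {}     # dataset (file system) name -> GB used

    def add_device(self, name: str, capacity_gb: int) -> None:
        self.devices[name] = capacity_gb

    def create_dataset(self, name: str) -> None:
        self.used[name] = 0

    def free_gb(self) -> int:
        # Free space is a property of the whole pool, not of any single dataset.
        return sum(self.devices.values()) - sum(self.used.values())

    def write(self, dataset: str, size_gb: int) -> None:
        if size_gb > self.free_gb():
            raise OSError("pool is full")
        self.used[dataset] += size_gb


pool = StoragePool()
pool.add_device("disk1", 100)
pool.create_dataset("home")
pool.create_dataset("media")
pool.write("home", 60)
pool.write("media", 40)
print(pool.free_gb())  # 0
pool.add_device("disk2", 100)  # no repartitioning or resizing of "home" or "media" needed
pool.write("media", 10)
print(pool.free_gb())  # 90
```

With a traditional partition-per-file-system layout, the new disk would instead have to be formatted and mounted separately, or an existing volume grown by hand.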

Here’s a list of the top ZFS advantages:

- Great scalability and support for practically unlimited data and metadata storage capacity (ZFS is a 128-bit file system);

- Extensive protection against data corruption compared with other file systems, although this matters less for most home NAS setups, since the risks ZFS safeguards against are very small there;

- Efficient data compression, snapshots, and copy-on-write clones;

- Continuous integrity checking and automatic repair, plus significantly greater redundancy than BTRFS.
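The integrity checking and automatic repair mentioned above rely on keeping a checksum separately from the data it describes (ZFS stores each block’s checksum in the block pointer that references it). The following sketch of a self-healing two-way mirror is hypothetical: `MirroredBlock` is an illustrative name, and SHA-256 from Python’s `hashlib` stands in for whichever checksum algorithm a pool is actually configured to use.

```python
from __future__ import annotations

import hashlib


def checksum(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


class MirroredBlock:
    """Hypothetical two-way mirrored block whose checksum is stored apart from the data."""

    def __init__(self, data: bytes) -> None:
        self.copies = [bytearray(data), bytearray(data)]  # one copy per disk
        self.expected = checksum(data)                     # kept separately, like a parent pointer's checksum

    def read(self) -> bytes:
        for good in self.copies:
            if checksum(bytes(good)) == self.expected:
                # Self-healing: overwrite any copy that fails verification with the good one.
                for i, copy in enumerate(self.copies):
                    if checksum(bytes(copy)) != self.expected:
                        self.copies[i] = bytearray(good)
                return bytes(good)
        raise OSError("every copy failed its checksum: data is unrecoverable")


block = MirroredBlock(b"important data")
block.copies[0][0] ^= 0xFF                 # simulate silent corruption (bit rot) on the first disk
print(block.read())                        # b'important data', served from the second copy
print(block.copies[0] == block.copies[1])  # True: the bad copy was repaired
```

Without the separately stored checksum, the system would see two copies that disagree but could not tell which one is correct.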

Along with this, ZFS has its drawbacks:

- Many of its processes rely on RAM (notably its ARC read cache), which is why ZFS uses a lot of it;

- ZFS requires a powerful environment (computer or server resources, that is) to run at a good speed;

- ZFS is not the best option for working with microservice architectures and weak hardware.

XFS

XFS, originally developed by Silicon Graphics (SGI), is a 64-bit, high-performance journaling file system and the default file system in the RHEL family.

There are plenty of benefits to choosing XFS as a file system:

- XFS works extremely well with large files;

- XFS is known for its robustness and speed;

- XFS is particularly proficient at parallel input/output (I/O) operations due to its design, which is based on allocation groups;

- XFS provides excellent scalability of I/O threads, file system bandwidth, and size of files and of the file system itself when spanning multiple physical storage devices;

- XFS provides data consistency by using metadata logging and maintaining write barriers;

- XFS allocates space in extents and stores its data structures in B-trees, which improves overall file system performance, especially when dealing with large files;

- Delayed allocation with XFS helps prevent file system fragmentation, while online defragmentation is also supported;

- A unique feature of XFS is that I/O bandwidth is pre-allocated at a predetermined rate, which is suitable for many real-time applications.

- Compared with EXT4, XFS also offers dynamic (effectively unlimited) inode allocation, advanced allocation hinting (in case you need it), and, in recent versions, reflink support.
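Extents, mentioned in the list above, are a large part of why XFS handles big files efficiently: instead of recording every block a file uses, the file system records runs of contiguous blocks as (start, length) pairs. The hypothetical `to_extents` helper below shows only that compression step; real XFS extent records also store the file offset and are indexed with B-trees, both of which this sketch omits.

```python
from __future__ import annotations


def to_extents(blocks: list[int]) -> list[tuple[int, int]]:
    """Collapse block numbers (in file order) into (start_block, length) extents."""
    extents: list[tuple[int, int]] = []
    for block in blocks:
        if extents and extents[-1][0] + extents[-1][1] == block:
            start, length = extents[-1]
            extents[-1] = (start, length + 1)  # block continues the current contiguous run
        else:
            extents.append((block, 1))         # gap: start a new run
    return extents


print(to_extents([100, 101, 102, 103, 200, 201, 7]))  # [(100, 4), (200, 2), (7, 1)]
```

A large, mostly contiguous file collapses into a handful of records, whereas a per-block list would grow with every block the file uses.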

Among the drawbacks of the XFS file system is a serious lack of protection against silent data corruption: XFS can checksum its metadata, but not file data.

XFS is also poorly protected against ‘bit rot’ (the gradual decay of stored data), which can make files nearly impossible to recover once data loss occurs.

Another disadvantage is that XFS handles large numbers of small files relatively poorly.

EXT4

The abbreviation “EXT” refers to Linux’s original extended file system, created in 1992.

Being the first to use a virtual file system (VFS) switch, it allowed Linux to support multiple file systems at the same time on the same system.
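The idea behind a VFS switch is a single common interface that the rest of the system calls, with each request routed to whichever concrete file system owns that path. Here is a minimal Python sketch of that dispatch; all class names are hypothetical, and the real Linux VFS is written in C and dispatches through tables of function pointers such as `struct file_operations`.

```python
from __future__ import annotations

from abc import ABC, abstractmethod


class FileSystem(ABC):
    """Hypothetical common interface that every concrete file system implements."""

    @abstractmethod
    def read(self, path: str) -> bytes: ...


class ExtLike(FileSystem):
    def read(self, path: str) -> bytes:
        return b"ext handled " + path.encode()


class FatLike(FileSystem):
    def read(self, path: str) -> bytes:
        return b"fat handled " + path.encode()


class VirtualFileSystem:
    """Routes each call to the file system mounted at the longest matching mount point."""

    def __init__(self) -> None:
        self.mounts: dict[str, FileSystem] = {}

    def mount(self, point: str, fs: FileSystem) -> None:
        self.mounts[point] = fs

    def read(self, path: str) -> bytes:
        # A mount point matches if the path is the point itself or lies underneath it.
        matches = [p for p in self.mounts
                   if path == p or path.startswith(p.rstrip("/") + "/")]
        if not matches:
            raise FileNotFoundError(path)
        return self.mounts[max(matches, key=len)].read(path)


vfs = VirtualFileSystem()
vfs.mount("/", ExtLike())
vfs.mount("/mnt/usb", FatLike())
print(vfs.read("/home/notes.txt"))     # b'ext handled /home/notes.txt'
print(vfs.read("/mnt/usb/photo.jpg"))  # b'fat handled /mnt/usb/photo.jpg'
```

Programs only ever call the common `read`, which is what lets several file systems coexist on one machine.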

Since then, it has been succeeded by three generations: ext2, ext3, and EXT4, the last of which is the default on many Linux distributions today.

EXT4’s main benefits are:

- Able to handle larger files and volumes than its evolutionary predecessors;

- Delayed allocation, which improves performance and reduces fragmentation by allocating larger amounts of data at a time, and can also extend flash memory lifespan;

- Journaling (a system of logging changes before they are applied, to reduce file corruption), which greatly increases the reliability and fault tolerance of the system;

- Persistent pre-allocation, plus journal and metadata checksumming;

- Faster file-system checking;

- Unlimited number of subdirectories.
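Journaling, listed above, follows a write-ahead pattern: record the intended change in the journal, mark it committed, and only then update the main area. After a crash, committed entries are replayed and uncommitted ones are discarded. The sketch below is a hypothetical toy (`JournaledDisk` and its `crash_at` parameter are illustrative names); EXT4’s actual journal is managed by the JBD2 layer and, in its default mode, journals metadata rather than full file contents.

```python
from __future__ import annotations


class JournaledDisk:
    """Hypothetical write-ahead journal: log the change, commit it, then apply it in place."""

    def __init__(self) -> None:
        self.data: dict[str, bytes] = {}                  # the main file system area
        self.journal: list[tuple[str, bytes, bool]] = []  # (key, value, committed?)

    def write(self, key: str, value: bytes, crash_at: str | None = None) -> None:
        self.journal.append((key, value, False))  # 1. log the intended change
        if crash_at == "before_commit":
            return
        self.journal[-1] = (key, value, True)     # 2. mark the entry as committed
        if crash_at == "before_apply":
            return
        self.data[key] = value                    # 3. apply it to the main area
        self.journal.pop()                        # 4. the entry is no longer needed

    def recover(self) -> None:
        """Replay committed entries after a crash; discard uncommitted ones."""
        for key, value, committed in self.journal:
            if committed:
                self.data[key] = value
        self.journal.clear()


disk = JournaledDisk()
disk.write("a", b"1")
disk.write("b", b"2", crash_at="before_commit")  # crash before the journal entry was committed
disk.write("c", b"3", crash_at="before_apply")   # crash after commit, before the in-place update
disk.recover()
print(disk.data)  # {'a': b'1', 'c': b'3'}
```

Either way, recovery only has to scan the short journal rather than the whole disk, which is also why journaled file systems check faster after a crash.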

However, when we review EXT4 vs BTRFS, here’s the downside: BTRFS has disk and volume management built-in, while EXT4 is a “pure filesystem”.

If you have multiple disks, and therefore parity or redundancy from which corrupted data could theoretically be recovered, EXT4 has no way of knowing that, let alone using it to your advantage.
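As an illustration of the kind of redundancy meant here, single-parity schemes such as RAID 5 store the XOR of the data blocks, so any one lost block can be rebuilt from the survivors. The `xor_blocks` helper and the three-disk layout are hypothetical. Note that parity can rebuild a block known to be missing, but detecting silent corruption also requires checksums, which EXT4 does not keep for file data.

```python
from __future__ import annotations

from functools import reduce


def xor_blocks(blocks: list[bytes]) -> bytes:
    """XOR equal-length blocks together, byte by byte."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


data = [b"AAAA", b"BBBB", b"CCCC"]  # one block on each of three data disks
parity = xor_blocks(data)           # stored on a fourth disk

# The second data disk fails: XOR the surviving blocks with the parity to rebuild it.
rebuilt = xor_blocks([data[0], data[2], parity])
print(rebuilt)  # b'BBBB'
```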

Besides, EXT4 has limited capacity for handling today’s data volumes, which is why some consider it somewhat outdated.

File System Recovery

It’s true that some file system recovery applications may be good at fixing minor logical errors or volume corruption issues.

However, diagnosing the problem itself can be very tricky, not to mention the recovery process that follows, which requires considerable expertise and technical skill.

To prevent the situation from getting worse (think of permanently losing your crucial files), we recommend you contact a credible data recovery team.

Whether you’ve encountered a physical problem, like damage to your storage device, or a logical issue caused by a file system failure, SalvageData has all the necessary equipment and certified experience to fix it as quickly and safely as possible.

Contact us 24/7 for a free examination of your case, and let the professionals do the rest.